Service mesh products from Isovalent and Solo.io branched out this week beyond Kubernetes, but an underlying battle over the role of eBPF and the future of service mesh architectures raged on at KubeCon + CloudNativeCon Europe.

Isovalent, the commercial backer of the Cilium networking project, which is based on the extended Berkeley Packet Filter (eBPF) Linux kernel utility, rolled out Cilium Mesh this week, a companion to Cilium service mesh that links with resources outside Kubernetes. Not to be outdone, Istio service mesh platform vendor Solo.io launched Gloo Fabric, which supports multi-cloud network management for VM-based and serverless workloads in addition to containers and Kubernetes.

“The new Cilium Mesh from Isovalent addresses two significant areas of unfinished business in the container networking and service mesh space,” said Brad Casemore, an analyst at IDC. “First, it provides full-stack container networking, and second, it does so in a way that provides orchestrated multi-cluster and multi-cloud capabilities.”

Solo.io is taking a different path with Ambient Mesh, one that maintainers said supports stronger zero-trust security features.

“They’re essentially aiming for the same destination, and they should achieve similar outcomes,” Casemore said. “[Both are] rolling up and consolidating discrete elements and functions, providing some useful architectural simplification and streamlined operations in the process.”

It’s a sign that cloud-native technologies have become part of a much broader network automation and virtualization platform among enterprises, Casemore said.

The service mesh sidecar schism

Since the debut of Istio and Linkerd 2 in 2018, service meshes in the Kubernetes world have primarily consisted of a control plane that delegates network management to a fleet of components called sidecars, specialized containers that are injected into each Kubernetes pod. These sidecars handle automatic mutual TLS (mTLS) authentication and sophisticated routing functions that operate at a finer-grained level than traditional host- and VM-based network tools.

During the early days of service mesh, Cilium and eBPF occupied the separate realm of Kubernetes Container Network Interface (CNI) plugins, which focused on Kubernetes cluster nodes rather than pods.

But the sidecar took a back seat when Cilium service mesh launched in beta in late 2021 and became generally available in Cilium 1.12 in July 2022. Cilium service mesh still uses proxies, but they are deployed on each Kubernetes node rather than within each pod. Proponents said this simplifies service mesh operations, provides more efficient network performance and maintains most of the same security characteristics as sidecar-based meshes.
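To make the operational difference concrete, here is a minimal sketch that counts proxy instances under each model. The cluster layout, pod names and function names are hypothetical and chosen only for illustration; nothing here is taken from Cilium or from any sidecar-based mesh.

```python
def sidecar_proxy_count(pod_to_node):
    """Sidecar model: one proxy container is injected into every pod."""
    return len(pod_to_node)


def per_node_proxy_count(pod_to_node):
    """Per-node model: one shared proxy on each node that hosts a pod."""
    return len(set(pod_to_node.values()))


# Hypothetical cluster: six pods scheduled across two nodes.
pods = {
    "cart-1": "node-a",
    "cart-2": "node-a",
    "checkout-1": "node-a",
    "catalog-1": "node-b",
    "catalog-2": "node-b",
    "payments-1": "node-b",
}

print("sidecar proxies: ", sidecar_proxy_count(pods))
print("per-node proxies:", per_node_proxy_count(pods))
```

In this toy cluster the sidecar model runs six proxies and the per-node model runs two. The count says nothing about isolation between workloads, which is the point the sidecar camp raises.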

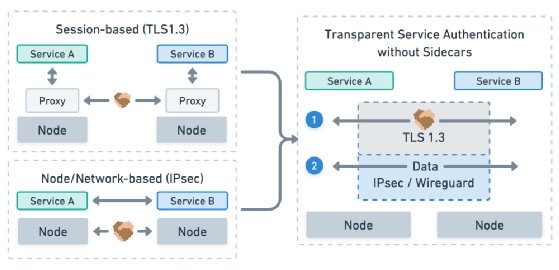

While Cilium service mesh does still support a Layer 7 proxy for certain features, it represents the most radical departure from the sidecar model by proposing a sidecarless approach to mTLS. This architecture separates the authentication mechanism’s handshake protocol from the network data payload.

“The way that Cilium service mesh is doing mTLS does not require the actual data and network traffic to go over TLS,” said Thomas Graf, founder of the Cilium project and CTO and co-founder of Isovalent, in an interview prior to KubeCon + CloudNativeCon EU.

He said mTLS with the service mesh model is a relatively good fit for TCP-only, IPv4-only applications that use modern protocols such as gRPC and HTTP.

“Enterprises are coming in with UDP, SCTP, multicast and all sorts of proprietary protocols. They also want mTLS, but they can’t have it, because mTLS has been built for the Internet, and in a proxy-based model only works for TCP,” Graf said.

Furthermore, separating network traffic from authentication handshakes offers security advantages, Graf said.
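The idea of keeping the handshake apart from the payload can be sketched roughly as follows. This is a conceptual illustration only, not Cilium's implementation: the `AuthCache` class, the identity strings, the time-to-live and the `forward_allowed` function are all assumptions made for the example. It shows why such a design would not depend on the transport protocol: the forwarding decision consults a record of which workload identities have already authenticated each other, rather than wrapping each connection in TLS.

```python
import time


class AuthCache:
    """Remembers which identity pairs completed a mutual handshake (hypothetical)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._expiry = {}  # (source identity, destination identity) -> expiry time

    def record_handshake(self, src, dst):
        """Call after an out-of-band mutual authentication handshake succeeds."""
        self._expiry[(src, dst)] = self._clock() + self._ttl

    def is_authenticated(self, src, dst):
        expires_at = self._expiry.get((src, dst))
        return expires_at is not None and self._clock() < expires_at


def forward_allowed(cache, src, dst, protocol):
    """Forwarding decision for one packet.

    The protocol argument is deliberately unused: authentication is tracked
    per identity pair, so TCP, UDP and SCTP traffic are treated alike.
    """
    return cache.is_authenticated(src, dst)
```

In this sketch a packet between two workloads is dropped until `record_handshake` has been called for that pair, and again once the entry expires, at which point a fresh handshake would be needed.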

But this approach drew objections from the Linkerd camp, beginning with a June 2022 blog post by Linkerd creator and Buoyant co-founder William Morgan that raised concerns about the security isolation and performance efficiency of eBPF-based shared proxies. The discussion continued at KubeCon + CloudNativeCon North America in October.

“EBPF is one of a crop of recent kernel features like io_uring (which Linkerd uses heavily) that change the way that applications and the kernel interact … but it’s not a magic bullet,” Morgan wrote in June. “You cannot run arbitrary applications as eBPF.”

At KubeCon EU this week, Linkerd backers continued their criticism of the eBPF-oriented, sidecarless approach in a presentation by Zahari Dichev, software engineer at Buoyant.

Dichev pointed out that Linkerd’s creators saw something similar before, in the first version of Linkerd, which predated mainstream use of Linux containers and Kubernetes. Linkerd 1 used a shared host-based proxy, and Dichev warned that Cilium service mesh will encounter the same pitfalls of muddled troubleshooting, limited kernel-space performance and security risk.

Meanwhile, Solo.io and the Istio project espouse a third take on shared proxies with Istio Ambient Mesh, which uses a host-based proxy called Ztunnel for Layer 3 and 4 network routing features and mutual TLS tunneling, while preserving the Envoy sidecar proxy for Layer 7 networking features such as rate limiting and circuit breaking.

Like Linkerd, which supports the Cilium CNI for Layer 3 and 4 network functions, Istio and Ambient Mesh integrate with eBPF to get traffic into Kubernetes pods via Ztunnel in an efficient way, said Louis Ryan, CTO at Solo.io. But Istio Ambient Mesh and Solo.io’s Gloo Platform don’t use the same method of splitting traffic from handshakes for mTLS.

“MTLS is not something you can do efficiently today in the kernel,” Ryan said. “You have to go to Ztunnel to do this work.”

Ztunnel also retains the zero-trust security isolation offered by sidecar-based service mesh, according to an Istio community blog post.

IT pros watch sidecar tennis match, but eBPF efficiency turns heads

Mainstream enterprises have yet to take the plunge with sidecarless service mesh, especially while Cilium service mesh and Istio Ambient Mesh are still at a relatively early stage of development. But they aren’t necessarily swayed by the security-focused arguments in favor of sidecars.

PostFinance, a financial services company in Berne, Switzerland, has been using the Cilium CNI for two years, because eBPF offers much more efficient network performance at Layers 3 and 4 than the Kubernetes-native kube-proxy mechanism. It hasn’t seen the need to add a service mesh to its network, but that’s not because of an aversion to sidecarless service mesh as a security risk, said Filip Nikolic, architect owner of Kubernetes at PostFinance, in an interview.

“To say it adds more of [a] security risk than the other solutions isn’t fair at all, because every technology has at least a certain degree of risk,” Nikolic said, citing vulnerabilities in the Envoy sidecar that surfaced within the last year.

Linkerd maintainers argue that the sidecar provides stricter isolation between app workloads, which is true, Nikolic said, as is the argument that eBPF isn’t suited to running complex applications, especially those that require stateful data management. But the eBPF community is also working on those limitations, according to Nikolic, and the isolation argument, to him, sounds all too familiar.

“It’s the exact same argument as when virtual machines came up — that it’s insecure [compared to physical machines], and yeah, there have been some issues there, but hardware manufacturers changed their CPUs and security improved,” he said. “And then containers came up, because virtual machines still take several minutes to start up, and there is less isolation there, but in essence, the technology in VMs and containers is good enough to be called safe.”

Sidecarless eBPF-based networks seem to be on the same continuum, where performance and efficiency advantages spur adoption, and security practices evolve accordingly, Nikolic said.

In general, mTLS has yet to be perfected in practice, regardless of whether sidecars are used to deliver it, added Andrey Rybka, head of CTO compute and cloud architecture at Bloomberg LP.

“Do I want mTLS? Absolutely,” Rybka said. “But the full life cycle isn’t always well-solved — we’ve seen issues with TLS certificates expiring on some major cloud services … there are some solutions, but sometimes we’re not able to renew certificates very well.”
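One piece of the certificate life cycle Rybka describes, noticing that a certificate is close to expiring, can be sketched with Python's standard library. This is a minimal illustration under stated assumptions: the 30-day renewal threshold and the function names are invented for the example, and the date strings follow the `notAfter` format that Python's `ssl` module reports for a peer certificate. It does not perform renewal, which is the harder part.

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after, now):
    """Days left before a certificate's notAfter time, negative if already expired.

    not_after uses the certificate time format "Mon DD HH:MM:SS YYYY GMT";
    now must be a timezone-aware datetime.
    """
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now).total_seconds() / 86400


def needs_renewal(not_after, now, threshold_days=30):
    """True when the certificate expires within the (assumed) threshold."""
    return days_until_expiry(not_after, now) <= threshold_days
```

A scheduled job could call `needs_renewal` for each certificate it tracks and raise an alert or trigger a renewal workflow when it returns true.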

Bloomberg uses a wide variety of cloud-native networking tech, from HashiCorp’s Consul for service discovery to a homegrown tool created 10 years ago called Business App Services. Thus, Rybka said, he doesn’t expect a zero-sum game between sidecars and sidecarless approaches in the long run.

Instead, choosing which open source service mesh project Bloomberg embraces in the future will depend more on community strength than technical approach, Rybka said.

“Istio potentially has a better maturity story around it,” he said. “It’s been around for a little longer [than Cilium service mesh] and has the backing of Google and others.”

Beth Pariseau, senior news writer at TechTarget, is an award-winning veteran of IT journalism. She can be reached on Twitter @PariseauTT.