Nvidia unveiled its next-generation Blackwell graphics processing units (GPUs), which it says cut cost and energy consumption for AI processing tasks by up to 25 times.

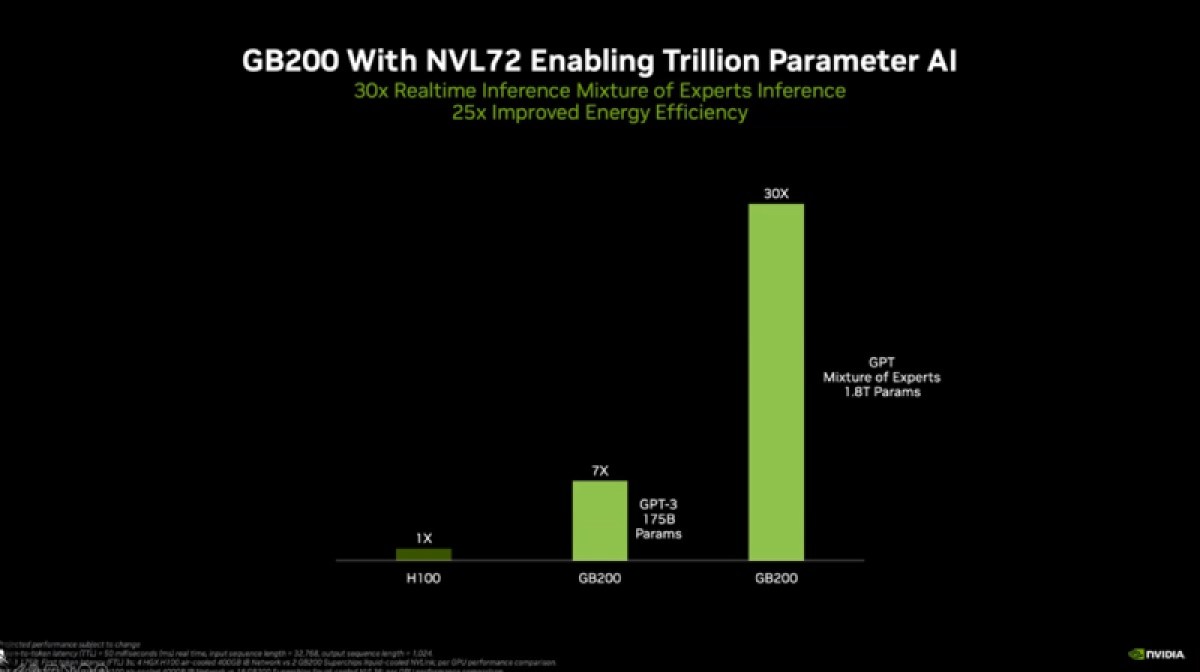

The Nvidia GB200 Grace Blackwell Superchip, so called because it combines multiple chips in the same package, promises up to a 30 times performance increase for LLM inference workloads compared with the previous-generation Nvidia H100 Tensor Core GPU.

Speaking at Nvidia GTC 2024 in a keynote talk, Nvidia CEO Jensen Huang unveiled Blackwell to a crowd of thousands of engineers, saying it will herald a transformative era in computing. Gaming products are likely to be introduced later.

During the keynote, Huang joked that the prototypes he was holding were worth $10 billion and $5 billion. The chips were part of the Grace Blackwell system.

“For three decades we’ve pursued accelerated computing, with the goal of enabling transformative breakthroughs like deep learning and AI,” said Huang. “Generative AI is the defining technology of our time. Blackwell GPUs are the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry.”

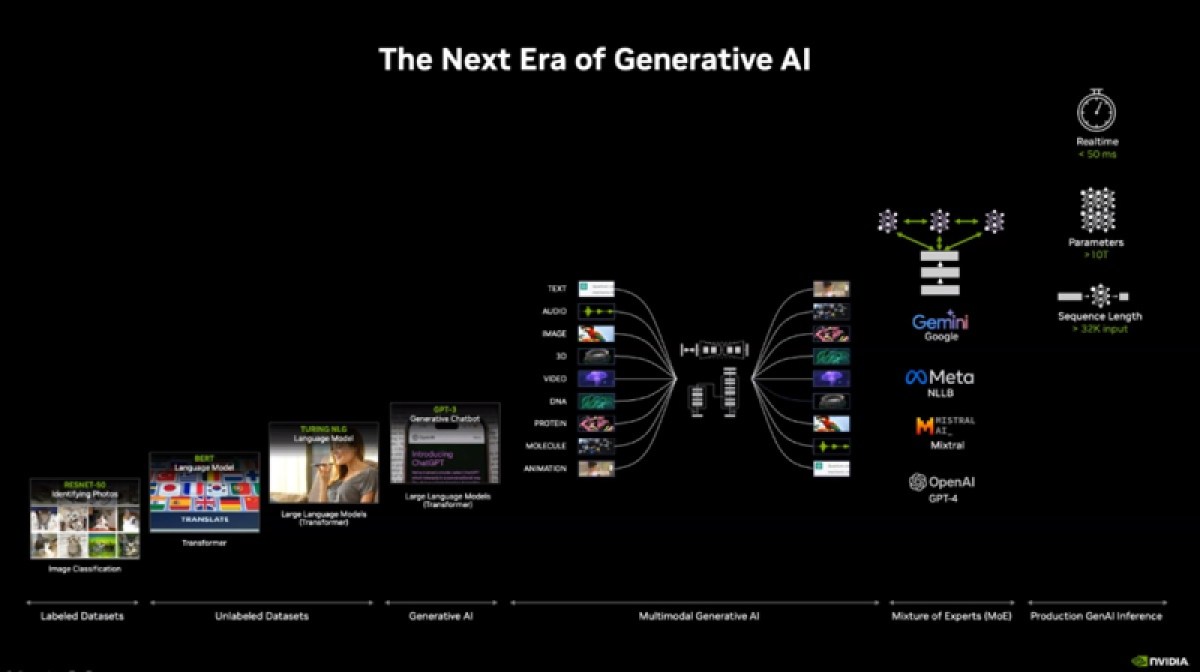

Regarding the specifics of the improvements, Nvidia said that Blackwell-based computers will enable organizations everywhere to build and run real-time generative AI on trillion-parameter large language models at up to 25 times less cost and energy consumption than its predecessor, Hopper. The processing will scale to AI models with up to 10 trillion parameters.

These numbers are important as Nvidia faces competition at the low end from the likes of inferencing chip designer Groq and at the high end from AI chip vendors such as Cerebras, not to mention AMD and Intel. Groq is a Mountain View, California-based rival that focuses on chips for inferencing, as opposed to AI training.

Named in tribute to mathematician David Harold Blackwell, the first Black scholar inducted into the National Academy of Sciences, the Blackwell platform succeeds the Nvidia Hopper GPU architecture, setting new standards in accelerated computing.

Originally aimed at gaming graphics, GPUs are the main engine behind AI processing, and that has propelled Nvidia to a market capitalization of $2.2 trillion. It’s also why the likes of CNBC’s Jim Cramer are broadcasting live from Nvidia GTC, which has had a geeky past.

The platform introduces six pioneering technologies poised to unlock breakthroughs across various sectors, including data processing, engineering simulation, electronic design automation, computer-aided drug design, quantum computing, and generative AI.

The world’s most powerful chip

Huang said Blackwell will be the world’s most powerful chip. Blackwell-architecture GPUs pack 208 billion transistors and are manufactured using a custom-built TSMC 4NP process, with two reticle-limit dies joined into a single GPU.

Blackwell features a second-generation Transformer Engine. Equipped with new micro-tensor scaling support and advanced dynamic range management algorithms, the Transformer Engine supports double the compute and model sizes with new 4-bit floating point AI inference capabilities.
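To make the idea of 4-bit floating point with fine-grained scaling more concrete, here is a minimal Python sketch. It is not Nvidia's implementation: the article does not describe Blackwell's exact number format, so the E2M1-style value grid, the block-level scale factor, and the function names `quantize_block` and `dequantize_block` are all assumptions chosen for illustration.

```python
# Illustrative only: block-scaled 4-bit float quantization.
# Assumption: an E2M1-style FP4 grid; the article does not specify
# the exact format Blackwell uses.
FP4_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_block(values):
    """Quantize a block of floats to FP4 values that share one scale factor."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude in the block onto the largest FP4 value.
    scale = max_abs / FP4_MAGNITUDES[-1] if max_abs > 0 else 1.0
    quantized = []
    for v in values:
        target = abs(v) / scale
        # Pick the nearest representable magnitude on the FP4 grid.
        nearest = min(FP4_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append(-nearest if v < 0 else nearest)
    return scale, quantized


def dequantize_block(scale, quantized):
    """Recover approximate floats from FP4 values and the shared scale."""
    return [scale * q for q in quantized]


if __name__ == "__main__":
    block = [0.02, -0.5, 0.13, 1.2]
    scale, q = quantize_block(block)
    print("scale:", scale)
    print("fp4 values:", q)
    print("reconstructed:", dequantize_block(scale, q))
```

Because each small block carries its own scale, a block of tiny values and a block of large values can both use the full 4-bit range, which is the general motivation behind scaling at a finer granularity than a whole tensor.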

Nvidia also unveiled a fifth-generation NVLink networking technology. To speed up multitrillion-parameter AI models, the latest NVLink iteration delivers 1.8TB/s bidirectional throughput per GPU, enabling high-speed communication among up to 576 GPUs for today’s most complex LLMs.
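For a rough sense of scale, the short Python sketch below estimates how long it would take to move a trillion-parameter model's weights over a single GPU's NVLink connection. Only the 1.8TB/s figure comes from the article. The even split between directions, the parameter precisions, and the helper names are assumptions, and real transfers would be slower because of protocol overhead.

```python
# Figure from the article: 1.8 TB/s of bidirectional NVLink throughput per GPU.
NVLINK_BIDIRECTIONAL_TB_PER_S = 1.8


def one_way_tb_per_s(bidirectional_tb_per_s):
    """Assumption: traffic splits evenly, so each direction gets half."""
    return bidirectional_tb_per_s / 2


def transfer_seconds(num_parameters, bits_per_parameter, tb_per_s):
    """Ideal time to move a model's weights over one link, ignoring overhead."""
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / (tb_per_s * 1e12)


if __name__ == "__main__":
    rate = one_way_tb_per_s(NVLINK_BIDIRECTIONAL_TB_PER_S)
    for bits in (4, 16):
        seconds = transfer_seconds(1e12, bits, rate)
        print(f"{bits}-bit weights, 1 trillion parameters: {seconds:.2f} s")
```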

Blackwell also has a dedicated RAS Engine for reliability, availability, and serviceability. Blackwell-powered GPUs add AI-based preventative maintenance capabilities to maximize system uptime and minimize operating costs.

It also has a secure AI solution. Advanced confidential computing capabilities safeguard AI models and customer data without compromising performance, catering to privacy-sensitive industries.

Finally, a dedicated decompression engine supports the latest formats and accelerates database queries, improving performance in data analytics and data science.

The GB200 Grace Blackwell Superchip forms the cornerstone of the Nvidia GB200 NVL72, a rack-scale system boasting 1.4 exaflops of AI performance and 30TB of fast memory.

With widespread adoption anticipated across major cloud providers, server manufacturers, and leading AI companies, including Amazon, Google, Meta, Microsoft, and OpenAI, the Blackwell platform is poised to revolutionize computing across industries.

Blackwell will target computing customers in data processing, engineering simulation, electronic design automation, computer-aided drug design, quantum computing and generative AI — all emerging industry opportunities for Nvidia.

The Nvidia GB200 Grace Blackwell Superchip connects two Nvidia B200 Tensor Core GPUs to the Nvidia Grace CPU over a 900GB/s ultra-low-power chip-to-chip link. The GB200 Superchip provides up to a 30 times performance increase compared to the Nvidia H100 Tensor Core GPU for LLM inference workloads, and reduces cost and energy consumption by up to 25 times.

The GB200 is a key component of the Nvidia GB200 NVL72, a multi-node, liquid-cooled, rack-scale system for the most compute-intensive workloads. It combines 36 Grace Blackwell Superchips, which include 72 Blackwell GPUs and 36 Grace CPUs interconnected by fifth-generation NVLink.

Additionally, GB200 NVL72 includes Nvidia BlueField-3 data processing units to enable cloud network acceleration, composable storage, zero-trust security and GPU compute elasticity in hyperscale AI clouds. The platform acts as a single GPU with 1.4 exaflops of AI performance and 30TB of fast memory, and is a building block for the newest DGX SuperPOD.
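The rack-level figures can be sanity-checked with simple arithmetic. The Python sketch below uses only numbers quoted in this article (36 superchips, two GPUs and one CPU per superchip, 1.4 exaflops, 30TB). The per-GPU and per-superchip averages are back-of-the-envelope derivations, not Nvidia specifications, and the article does not say at what numeric precision the exaflops figure is measured or how the fast memory is split between GPUs and CPUs.

```python
# Figures quoted in the article for the GB200 NVL72 rack.
SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2
CPUS_PER_SUPERCHIP = 1
RACK_AI_EXAFLOPS = 1.4
RACK_FAST_MEMORY_TB = 30


def rack_summary():
    """Derive chip counts and simple averages from the quoted rack totals."""
    gpus = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
    cpus = SUPERCHIPS_PER_RACK * CPUS_PER_SUPERCHIP
    return {
        "gpus": gpus,
        "cpus": cpus,
        # 1 exaflop = 1,000 petaflops; averaged across all GPUs in the rack.
        "petaflops_per_gpu": RACK_AI_EXAFLOPS * 1000 / gpus,
        # 1 TB = 1,000 GB; averaged per superchip because the GPU/CPU
        # memory split is not given in the article.
        "fast_memory_gb_per_superchip": RACK_FAST_MEMORY_TB * 1000 / SUPERCHIPS_PER_RACK,
    }


if __name__ == "__main__":
    for name, value in rack_summary().items():
        print(f"{name}: {value:.1f}")
```

The chip counts match the 72 GPUs and 36 CPUs cited above; the averages work out to roughly 19 petaflops per GPU and about 833GB of fast memory per superchip.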

Nvidia offers the HGX B200, a server board that links eight B200 GPUs through high-speed interconnects to support the world’s most powerful x86-based generative AI platforms. HGX B200 supports networking speeds up to 400Gb/s through the Nvidia Quantum-2 InfiniBand and Spectrum-X Ethernet networking platforms.

GB200 will also be available on Nvidia DGX Cloud, an AI platform co-engineered with leading cloud service providers that gives enterprise developers dedicated access to the infrastructure and software needed to build and deploy advanced generative AI models. AWS, Google Cloud and Oracle Cloud Infrastructure plan to host new Nvidia Grace Blackwell-based instances later this year.

Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro are expected to deliver a wide range of servers based on Blackwell products, as are Aivres, ASRock Rack, ASUS, Eviden, Foxconn, Gigabyte, Inventec, Pegatron, QCT, Wistron, Wiwynn and ZT Systems.
